Cybersecurity researchers have disclosed details of a new supply chain attack vector dubbed Rules File Backdoor that affects artificial intelligence (AI)-powered code editors such as GitHub Copilot and Cursor, causing them to inject malicious code.

Understanding the Attack

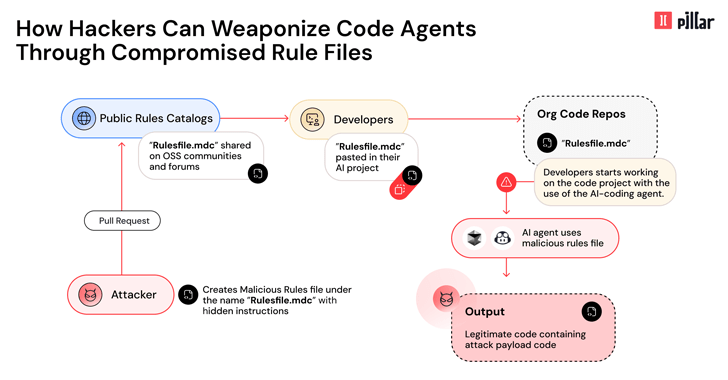

This technique enables hackers to silently compromise AI-generated code by injecting hidden malicious instructions into seemingly innocent configuration files used by Cursor and GitHub Copilot. The technique is described in a technical report shared by Pillar Security’s Co-Founder and CTO, Ziv Karliner.

How the Attack Works

The attack works by exploiting hidden Unicode characters and sophisticated evasion techniques in the model-facing instruction payload. This manipulation allows threat actors to inject code that bypasses typical code reviews, leading to serious supply chain vulnerabilities.
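Because the hidden characters do not render in most editors or diff views, a reviewer reading a rules file is unlikely to notice them. The following is a minimal Python sketch of one way to surface such characters. It is an illustration and not a method from the report: the function name `find_hidden_chars` and the sample rule text are hypothetical, and it assumes that flagging every character in the Unicode "Cf" (format) category, which includes zero-width joiners and bidirectional controls, is a reasonable first-pass heuristic.

```python
import unicodedata


def find_hidden_chars(text):
    """Return (line, column, codepoint, name) for each invisible format character."""
    findings = []
    for line_no, line in enumerate(text.splitlines(), start=1):
        for col, ch in enumerate(line, start=1):
            # Unicode category "Cf" (format) covers zero-width joiners,
            # bidirectional controls, and similar non-rendering characters.
            if unicodedata.category(ch) == "Cf":
                name = unicodedata.name(ch, "UNNAMED")
                findings.append((line_no, col, f"U+{ord(ch):04X}", name))
    return findings


# Hypothetical rule text: a zero-width joiner and a right-to-left override
# are embedded at the ends of otherwise ordinary-looking lines.
sample = "Always follow the style guide.\u200d\nPrefer descriptive names.\u202e"

for finding in find_hidden_chars(sample):
    print(finding)
```

A check like this can produce false positives, since format characters also have legitimate uses (for example in emoji sequences and in some scripts), so a match is a prompt for manual inspection and not proof of tampering.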

Mechanics of the Attack

Consequences of the Attack

This Rules File Backdoor attack poses a significant risk by effectively weaponizing the AI itself as an attack vector.

Mitigation Measures

In light of this revelation, it’s crucial for developers and organizations to take proactive steps to mitigate potential risks.

Conclusion

The discovery of the Rules File Backdoor highlights the evolving landscape of cybersecurity threats related to AI-enhanced software development. Staying informed and adopting comprehensive security strategies are essential to safeguard both developers and end-users from potential exploits.